Marketing plans are often evaluated in leadership reviews through the same core question: what will this drive? In familiar territory, the answers are straightforward. You know the inputs, can model the return and can define in advance what success looks like.

AI breaks that pattern, not because it’s unpredictable, but because it compresses time. Ideas move to execution faster than organizations are used to managing, while the rules for evaluating value lag behind.

Individuals can see immediate gains in speed and productivity, but organizations struggle to translate those wins into something repeatable, governable and scalable. This gap — between learning quickly and proving value responsibly — is where most AI initiatives stall.

Experimentation and scale need different homes

Marketers aren’t new to experimentation. We run pilots, POCs, channel tests and creative tests constantly. In most of those cases, the experimentation is bounded. You’re testing a single variable — a new channel, format or audience. You still know what success looks like, how long to run the test and when to stop or pivot.

AI is different. AI experimentation isn’t about proving a single tool, objective or tactic. It requires an upfront investment before value becomes visible. Teams have to tinker continuously: refining inputs, documenting hard-won knowledge and encoding judgment that once lived only in people’s heads. Early on, it often requires more time, not less. A human still runs the work end-to-end, watching the system closely and validating every output. From a delivery standpoint, there’s essentially no upside at first.

More importantly, this changes how people experience their work. Roles blur. Confidence is tested. Teams are asked to trust systems they are simultaneously responsible for teaching. That combination of high complexity and emotional friction makes this transition fundamentally different from past waves of marketing experimentation.

That’s why traditional experimentation models break. The learning curve is front‑loaded, the benefits are delayed and the work doesn’t neatly map to a single KPI. Without an explicit way to separate learning from production, teams default to one of two failure modes:

- Everything becomes an experiment with no path to scale.

- Everything is forced into production standards before learning has occurred.

An operating model answers questions technology can’t:

- Where does this kind of experimentation live?

- How much manual oversight is expected — and for how long?

- When do standards, SLAs and governance apply?

- Who owns the system while it is still learning?

Mature organizations step back and design how AI-enabled work moves from idea to impact. Instead of a one-time transformation, they treat it as a repeatable loop: experiment, harden, scale and re-evaluate. Without this separation, AI efforts stall because the organization lacks a safe place for this work to mature.

Dig deeper: Why DIY experimentation is critical to AI success

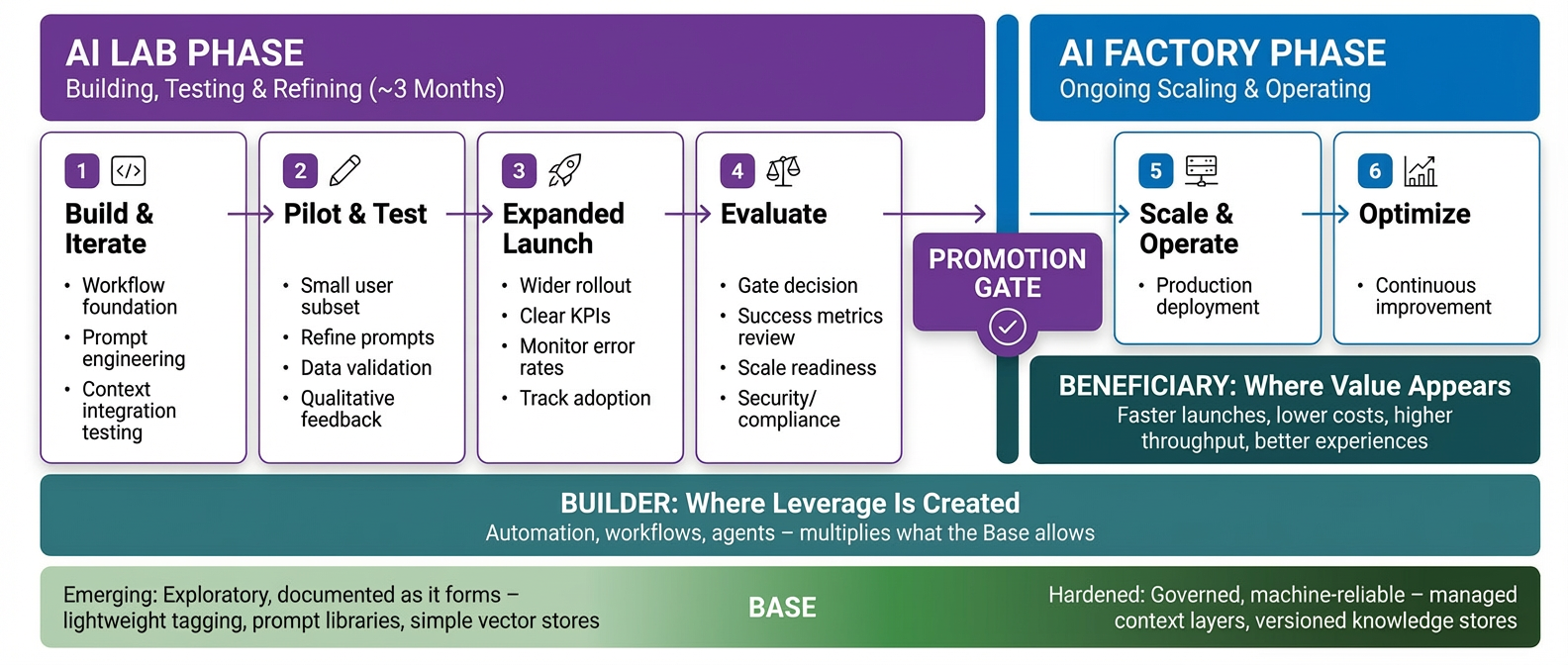

The AI lab and the AI factory: Two modes, one system

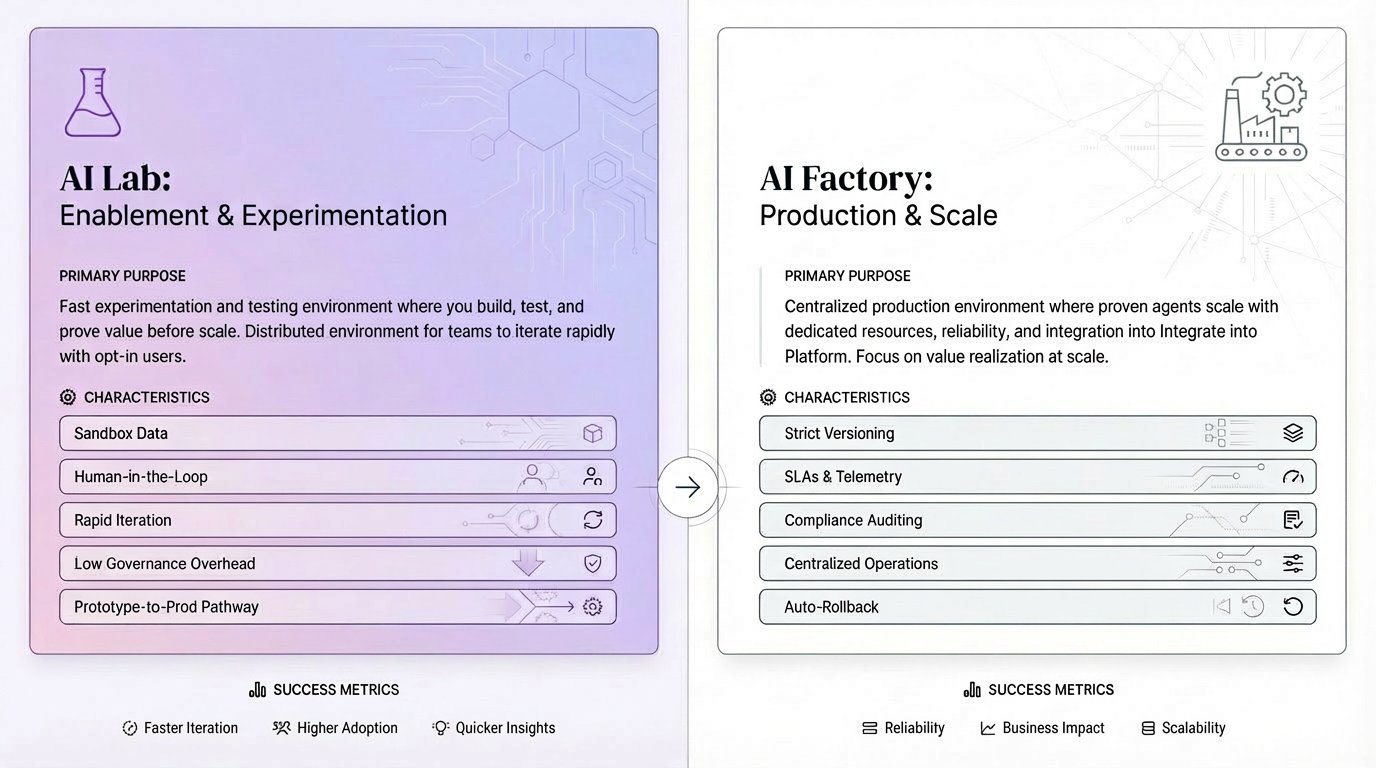

AI work is increasingly split into two connected modes: an AI lab and an AI factory. This reflects a simple reality — you can’t optimize the same work for learning and reliability at the same time.

The AI lab exists to answer one question: “Is this worth learning about?” It’s optimized for speed, discovery and insight. Labs are where teams explore what AI might do, test hypotheses and surface opportunities. The work is intentionally messy. Outputs are fragile. Humans remain deeply involved, often working alongside the machine. Success is measured in learning velocity, not efficiency.

The AI factory exists to answer a different question: “Can this be trusted at scale?” Factories are optimized for consistency, throughput and accountability. Only work that has proven value and predictable behavior graduates here. Standards tighten. Governance becomes explicit. Success is measured in reliability, cost-to-serve reduction and repeatability.

Most AI initiatives fail when these two modes are blurred. When lab work is forced to meet production standards, experimentation stalls. When factory systems are treated like experiments, trust collapses. Separating the two creates a safe path from learning to impact without pretending that either phase is quick or linear.

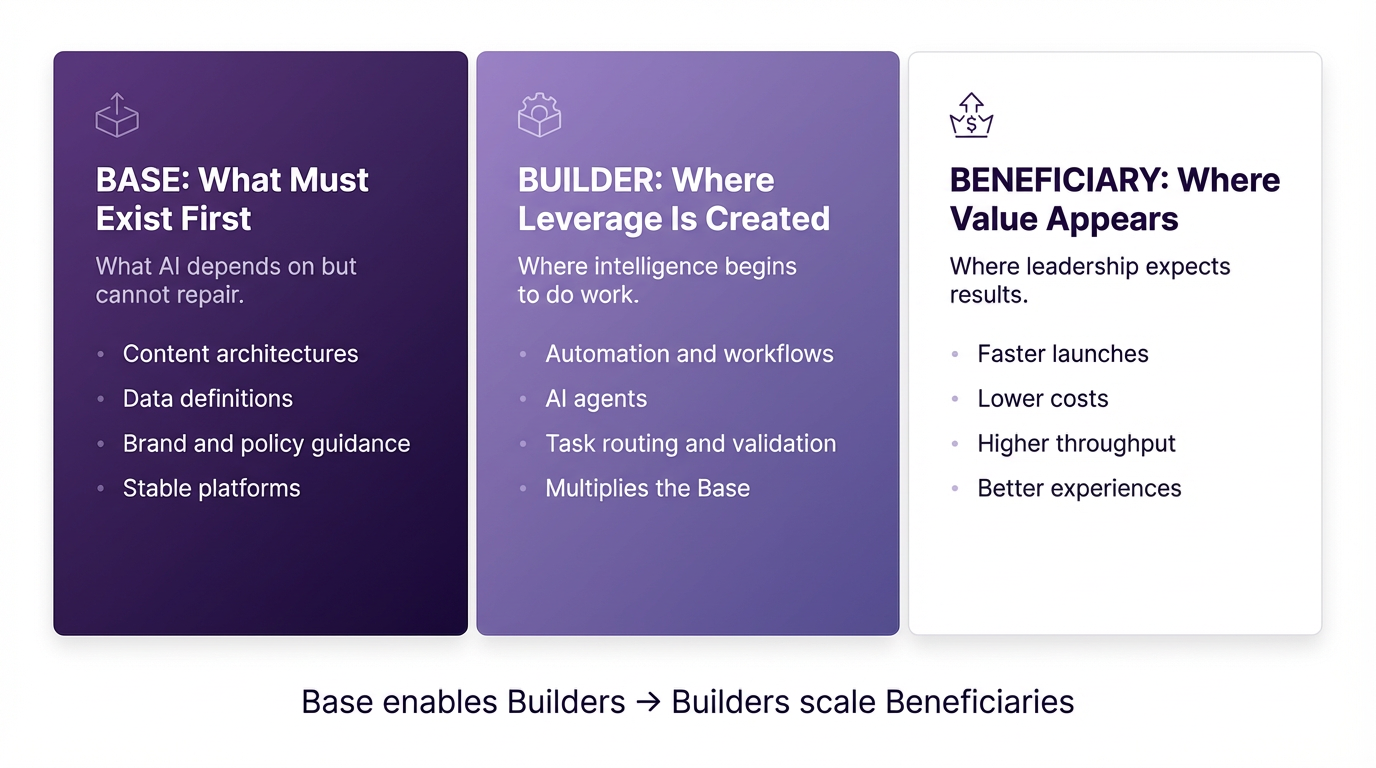

The base-builder-beneficiary model

The lab-factory split only works if teams have a shared way to understand what kind of work is happening at each stage. Without that, experimentation feels unbounded and scale feels premature.

To make AI operational rather than theoretical, teams need a shared way to distinguish what enables work, what creates leverage and where value actually shows up. The base-builder-beneficiary framework defines dependencies between types of work, not levels of maturity.

Base: What must exist first

The base includes the conditions AI depends on, including:

- Modular, reusable content architectures.

- Data at the right granularity, with clear definitions.

- Explicit brand, legal and policy guidance.

- Stable platforms and integration paths.

- Context graphs to capture decisioning logic.

When these elements are weak, AI output looks confident but behaves inconsistently. Teams end up debugging AI issues that are actually content, data or governance failures. Base work is often invisible and slow, but it determines whether AI becomes a system or a novelty.

Builder: Where leverage is created

The builder layer is where automation, workflows and agents are introduced. Intelligence begins to do work: drafting and revising content, routing tasks, validating rules and assembling outputs. Builders don’t create value on their own. They multiply whatever the base allows. But builders are highly configurable, and this is where teams discover the most about what is possible.

Strong foundations lead to compounding gains. Weak ones create brittle workflows that break under scale. Discipline matters here. Without a clear scope, builders sprawl and systems quietly accumulate complexity.

Beneficiary: Where value appears

The beneficiary layer is where leadership expects results: faster launches, lower cost‑to‑serve, higher throughput, incremental revenue and improved customer experiences. Many teams start here, asking AI to drive growth before the base and builder layers are ready. When value fails to materialize, confidence erodes.

The principle is simple: Base enables builders. Builders scale beneficiaries. But this sequence is never finished. Teams cycle through it repeatedly as platforms evolve, data improves and expectations shift. There’s no final state, only the next version you’re actively building toward.

Dig deeper: How to level up your AI maturity from tools to transformation

The human-AI responsibility matrix

If the base-builder-beneficiary model explains what kind of work is happening, the human-AI responsibility matrix explains how responsibility is shared while that work is happening. This matters because AI work rarely fails on output quality alone. It fails when ownership, decision rights and trust aren’t aligned.

Rather than treating autonomy as a goal, enterprises are using responsibility as the organizing principle. The question isn’t how advanced the system is, but how much decision-making it should be allowed to carry and how much human oversight remains appropriate at that moment in time.

At one end of the spectrum, AI supports human-led work. Humans think, decide and act, while AI accelerates specific steps. This is where most experimentation begins and it is intentionally high-touch. At the other end, AI is trusted to decide and act within defined boundaries, while humans monitor outcomes and intervene when necessary. This mode is reserved for work that has proven stable, repeatable and low-variance.

Between these poles sit two transitional states. In collaborative modes, AI recommends and executes while humans retain decision authority. In delegated modes, humans define guardrails and policies and AI operates independently within them. Each shift represents not a technical milestone, but an increase in organizational trust.

The key insight is not autonomy for its own sake, but fit. Governance succeeds when responsibility is matched to capability, visibility and risk tolerance.

| Responsibility mode | What the human owns | What the AI does | When this is appropriate | Common risk |
| --- | --- | --- | --- | --- |
| Assist | Thinks, decides and acts | Supports individual steps (drafts, suggestions, analysis) | Early experimentation, high ambiguity, low trust | Under‑utilization, slow learning |
| Collaborate | Decides and owns outcomes | Recommends and executes with approval | Pattern discovery, repeatable tasks with judgment | Decision friction, review bottlenecks |
| Delegate | Sets guardrails and policies | Executes independently within bounds | Stable workflows, predictable variance | Over‑reach, silent errors |
| Automate | Monitors outcomes and exceptions | Decides and acts end‑to‑end | Proven, low‑variance systems at scale | Trust collapse if failures occur |
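For teams that encode these modes in a workflow tool, the matrix can be read as a small routing rule. The sketch below is one illustrative way to express it, not a reference implementation. The names `Mode`, `Task`, `route` and the `within_guardrails` flag are hypothetical, and the assumption that out-of-bounds work always escalates to a human comes from the "escalates exceptions" behavior described for delegated and automated work.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    """The four responsibility modes from the matrix."""
    ASSIST = "assist"
    COLLABORATE = "collaborate"
    DELEGATE = "delegate"
    AUTOMATE = "automate"


@dataclass(frozen=True)
class Task:
    name: str
    # Assumption: some upstream policy check sets this flag.
    within_guardrails: bool = True


def route(mode: Mode, task: Task) -> str:
    """Describe who carries the decision for a task under a given mode."""
    if mode is Mode.ASSIST:
        return "human decides and acts; AI supports steps"
    if mode is Mode.COLLABORATE:
        return "AI recommends; human approves before execution"
    # Delegate and Automate: AI acts alone only inside defined bounds.
    if not task.within_guardrails:
        return "escalate to human"
    if mode is Mode.DELEGATE:
        return "AI executes within guardrails"
    return "AI decides and acts; human monitors"


print(route(Mode.COLLABORATE, Task("subject-line variants")))
```

Moving a workflow from one mode to the next changes only the `mode` argument, which mirrors the point that each shift is a trust decision rather than a technical milestone.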

Dig deeper: The 5 levels of AI decision control every marketing team needs

How the frameworks work together

Individually, these frameworks are useful. Together, they form a practical system for moving AI work from exploration to impact. The easiest way to see how they connect is to look at them as a single operating matrix.

Mature organizations separate learning from delivery and use that separation to decide how much investment, rigor and expectation apply at each stage.

| Dimension | AI lab | AI factory |
| --- | --- | --- |
| Primary question | Is this worth learning about? | Can this be trusted at scale? |
| Primary purpose | Exploration, discovery, sense-making | Reliability, throughput, value realization |
| Base investment | Emerging, exploratory, documented as it forms (e.g., lightweight tagging, prompt libraries, simple vector stores, early retrieval setups) | Hardened, governed, machine‑reliable (e.g., MCPs, managed context layers, versioned knowledge stores accessible to agents) |
| Builder state | Prototyped, fragile, human‑supervised (single‑agent experiments, manual handoffs, linear workflows) | Production‑grade, orchestrated, monitored (multi‑agent workflows, orchestration layers, retries, fallbacks) |
| Beneficiary status | Hypothesized only (directional estimates, time‑saved anecdotes, potential cost reduction) | Realized and measured (defined KPIs, dashboards, throughput metrics, cost‑to‑serve tracking) |
| Human-AI responsibility | Assist → Collaborate (humans validate every output, AI proposes or assembles) | Delegate → Automate (humans set guardrails, AI executes and escalates exceptions) |
| Human involvement | High-touch, humans work alongside the system | Oversight, exception handling |
| Success signals | Learning velocity, insight surfaced, failure detected early | Uptime, cost-to-serve reduction, repeatability |
| Risk tolerance | High tolerance for messiness and false starts | Low tolerance for variance and regressions |
| Common failure if misused | Forced standardization that kills learning | Experimental behavior that erodes trust |

This model makes one principle explicit: Business value only materializes in the factory.

Labs surface potential. Factories deliver outcomes. The goal isn’t to rush work out of the lab, but to ensure there’s a deliberate path from learning to trust.

Turning AI frameworks into operating decisions

These aren’t three separate models. They describe the same system from different angles.

- The base-builder-beneficiary model describes what must mature for value to exist.

- Human-AI responsibility describes the level of autonomy the system has at any given moment.

- The lab-factory split describes where the work belongs as that maturity develops.

Together, they give you a practical way to assess progress by asking whether each AI initiative is operating in the correct mode for its current level of maturity. If you take one thing from this article, it shouldn’t be the terminology but the concrete operating moves you can make as a leader.

Deliberately separate learning from delivery

Create explicit space for AI labs where teams are allowed to iterate, explore and learn without being held to production standards. This doesn’t require a new team or new roles. It requires clarity of intent. Make it explicit when work is exploratory, what success looks like at that stage and what will not be measured yet.

Clear a visible path from the lab to the factory

The lab only works if people know there’s a way out. Once teams have tested several approaches and patterns begin to repeat, leadership’s job is to decide what gets promoted. That promotion gate should be clear:

- Which base elements need to be strengthened.

- What builder capabilities need hardening.

- What evidence is required to justify scale.
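One way to make that gate concrete is to record the three questions as a simple checklist that a review can fill in. This is a minimal sketch under stated assumptions: the `PromotionReview` class, its field names and the example entries are all hypothetical, and a real gate would carry whatever evidence your organization agrees on.

```python
from dataclasses import dataclass, field


@dataclass
class PromotionReview:
    """Hypothetical checklist for promoting lab work to the factory."""
    initiative: str
    base_gaps: list[str] = field(default_factory=list)       # base elements still to strengthen
    builder_gaps: list[str] = field(default_factory=list)    # builder capabilities still to harden
    evidence_required: list[str] = field(default_factory=list)
    evidence_provided: set[str] = field(default_factory=set)

    def missing_evidence(self) -> list[str]:
        """Evidence that was asked for but has not been supplied yet."""
        return [e for e in self.evidence_required if e not in self.evidence_provided]

    def ready_for_factory(self) -> bool:
        """Promote only when no gaps remain and all evidence is in."""
        return not (self.base_gaps or self.builder_gaps or self.missing_evidence())


# Illustrative values only; none of these come from a real review.
review = PromotionReview(
    initiative="campaign brief drafting",
    base_gaps=["brand guidance not yet documented"],
    evidence_required=["repeatable results across several campaigns"],
)
print(review.ready_for_factory(), review.missing_evidence())
```

Keeping the gaps as plain lists means the same record doubles as the to-do list for the team that owns the work while it is still in the lab.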

Invest in foundations before demanding leverage

Scaling AI is less about hiring different people and more about investing differently. Early effort goes into base work — documentation, context, standards and shared understanding. Only once those foundations are reliable does it make sense to invest heavily in builder capabilities like orchestration, multi‑agent workflows and automation.

Sell outcomes at the right level

Early on, value shows up as learning and individual efficiency. At scale, it must show up as throughput, reliability and business performance. Leaders need to translate between those layers — protecting early experimentation while preparing leadership for when and how real returns will appear.

Dig deeper: How to speed up AI adoption and turn hype into results

Building safe paths from AI learning to enterprise scale

This lab-factory model isn’t a temporary transition pattern. Like the shift brought on by social platforms, it reflects a more profound change in how marketing work gets designed, executed and governed. AI isn’t just changing how customers experience marketing. It’s reshaping how marketing gets built. The leaders who win will create safe spaces to learn, clear paths to scale and disciplined ways to turn experiments into lasting advantage.


Contributing authors are invited to create content for MarTech and are chosen for their expertise and contribution to the martech community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. MarTech is owned by Semrush. Contributor was not asked to make any direct or indirect mentions of Semrush. The opinions they express are their own.

Source: Istoé